Sunday Reads #170: Lemon markets, dark forests, and a firehose of malicious garbage.

Four hypotheses for the Internet of tomorrow.

Hey there!

Hope you’re having a great weekend. And Happy Chinese New Year to those who celebrate.

I got some great responses to last week's email, Ghost in the Shell.

You guys are doing some very interesting things with ChatGPT! (Whenever you can get it to load, of course. OpenAI is perpetually running short of server capacity.)

For example, one of my friends has started using ChatGPT to write his talks. He gets 80% done in 15 minutes. He records a voice note outlining what he wants to say, transcribes it through Otter.ai, and pastes the transcript into ChatGPT, which then writes the talk for him.

Mindblowing. 👏👏

And someone also shared this with me:

It’s become so easy to create content now!

It got me thinking...

1. A world where content is so, so easy to create... what does that world look like?

One thing I’ve been saying often is: when it's 10x easier to fake it than to make it, fakes will always outnumber the truth.

We saw it in the crypto summer of 2021, when all you needed was to create a token and you'd get mass adoption. A paper found that 98% of tokens launched on Uniswap were scams.

The general principle is:

When it's easy to showcase a veneer of "work" without doing the work itself, then 99% of the work you see will not be real.

When it's easy to generate content without writing it yourself, then 99% of content will be AI-generated.

And if 99% of content is AI-generated, you're better off assuming that 100% is AI-generated.

When you see any content online, the default assumption will be: this has been written by an AI.

This won’t happen tomorrow. It might not happen for the next three years. But inevitably, it will happen.

The Internet will become "a market for lemons".

What is "a market for lemons"?

"A market for lemons" is a thought experiment that shows how a market degrades in the presence of information asymmetry.

From Wikipedia:

Suppose buyers cannot distinguish between a high-quality car (a "peach") and a "lemon". Then they are only willing to pay a fixed price for a car that averages the value of a "peach" and "lemon" together.

But sellers know whether they hold a peach or a lemon. Given the fixed price at which buyers will buy, sellers will sell only when they hold "lemons". And they will leave the market when they hold "peaches" (as the value of a good car as per the seller will be higher than what the buyer is willing to pay).

Eventually, as enough sellers of "peaches" leave the market, the average willingness-to-pay of buyers will decrease (since the average quality of cars on the market decreased), leading even more sellers of high-quality cars to leave the market through a positive feedback loop.

Thus the uninformed buyer's price creates an adverse selection problem that drives the high-quality cars out of the market.

This is how a market collapses.

Soon, everything that's for sale is garbage. Nobody has any incentive to put anything other than garbage up for sale. Why would they, when they cannot prove that they're selling the real thing?
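If you want to watch that feedback loop play out in numbers, here's a toy sketch in Python. The numbers are my own assumptions for illustration (the classic textbook setup, not figures from the Wikipedia article): car quality is spread evenly between 0 and 1, a seller values a car at exactly its quality, and a buyer values it 50% more but can't tell peaches from lemons. Each round, only sellers whose car is worth less than the going price stay, and buyers re-price to the average car that's left.

```python
def simulate_lemons(rounds: int, buyer_premium: float = 1.5, start_price: float = 1.0) -> list[float]:
    """Toy adverse-selection loop (assumed numbers, for illustration only).

    Assumptions: car quality q is uniform on [0, 1]; a seller values a car
    at q; a buyer values it at buyer_premium * q, but can't see q, so they
    pay for the *average* car still on offer.
    """
    price = start_price
    prices = [price]
    for _ in range(rounds):
        # Only sellers with q <= price are willing to sell; the peaches leave.
        # With uniform quality, the remaining cars have q in [0, min(price, 1)],
        # so their average quality is half of that cap.
        avg_quality = min(price, 1.0) / 2
        # Buyers now offer what the average remaining car is worth to them.
        price = buyer_premium * avg_quality
        prices.append(price)
    return prices


if __name__ == "__main__":
    for round_no, p in enumerate(simulate_lemons(rounds=10)):
        # Under the uniform assumption, the share of cars still for sale equals the price (capped at 1).
        print(f"round {round_no:2d}: price {p:.3f}, cars still for sale {min(p, 1.0):.0%}")
```

With these made-up numbers the price loses a quarter of its value every round (1.0, then 0.75, then 0.5625, and so on), so after 10 rounds it's under 6% of where it started. The best cars leave first, and the market slides towards zero.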

This, by the way, is exactly why 98% of crypto projects are rug pulls. As Matt Levine says in You get the Crypto Rules you want:

Facebook Inc. (now Meta Platforms Inc.) announced in 2019 with enormous fanfare that it was going to launch a stablecoin and work closely with all of the relevant regulators blah blah blah, and it went to the Federal Reserve and said “what do we need to do to launch a stablecoin,” and the Fed said “you must bring me the egg of a dragon and the tears of a unicorn,” and now the Facebook stablecoin is shutting down. One of the largest companies in the world devoted millions of dollars to figuring out how to launch a stablecoin and concluded that it was impossible. It is demonstrably not impossible! Tether did it! Tether has a hugely successful stablecoin! Tether does not care at all about working closely with all of the relevant regulators! That’s why!

…“The revolution needs rules,” some crypto guys say, and they get so many rules. “We don’t need to follow any rules,” some other crypto guys say, and they’re also right.

It's too expensive, or outright impossible, to do things the right way in crypto. So the only people who launch projects are the shady ones.

Coming back to generative AI, we'll see something similar:

As instant "fake content" becomes more and more like "real content" that takes hours to painstakingly produce, the outcome is clear:

The Internet will become, slowly and then suddenly, completely fake.

It will become a market for lemons.

So what does this mean for how we use the Internet?

Don't believe what you see.

Lars Doucet talks about this in AI: Market for Lemons and the Great Logging Off:

The internet gets clogged with piles of semi-intelligent spam, breaking the default assumption that the "person" you're talking to is human.

The default assumption will be that anything you see is fake.

You think this is hyperbole? You don't think this can happen?

Well, then ask yourself: When did you last pick up a phone call from an unknown number?

20 years ago, you'd pick up a call from any number. It was almost always a real person, whom you wanted to speak to or who had something useful to tell you.

Today, an unknown number is always a robocaller, a scammer, or a telemarketer. You really, really don't want to speak to them.

Why won't the same thing happen with the Internet?

Look, Google search is already broken. The first 10 results for any search are either ads or SEO-hyper-optimized word salads.

What happens when these SEO-hyper-optimized word salads become 100x easier to create? As Tyler Cowen says, solve for the equilibrium.

Back to Doucet's article:

Up until now, all forms of spam, catfishing, social engineering, forum brigading, etc, have more or less been bottlenecked by the capabilities and energy of individual human beings. Sure, you can automate spam, but typically only by duplicating a rote message, which becomes easy to spot. There's always been a hard tradeoff between *quantity* and *quality* of the sort of operation you want to run.

With AI chatbots, not only can you effortlessly spin up a bunch of unique and differentiated messages, but they can also *respond dynamically* as if they were a person.

The silver lining may be (and I may be clutching at straws here) that our parents finally, finally, stop believing everything they read on WhatsApp.

But this isn't the end of the story.

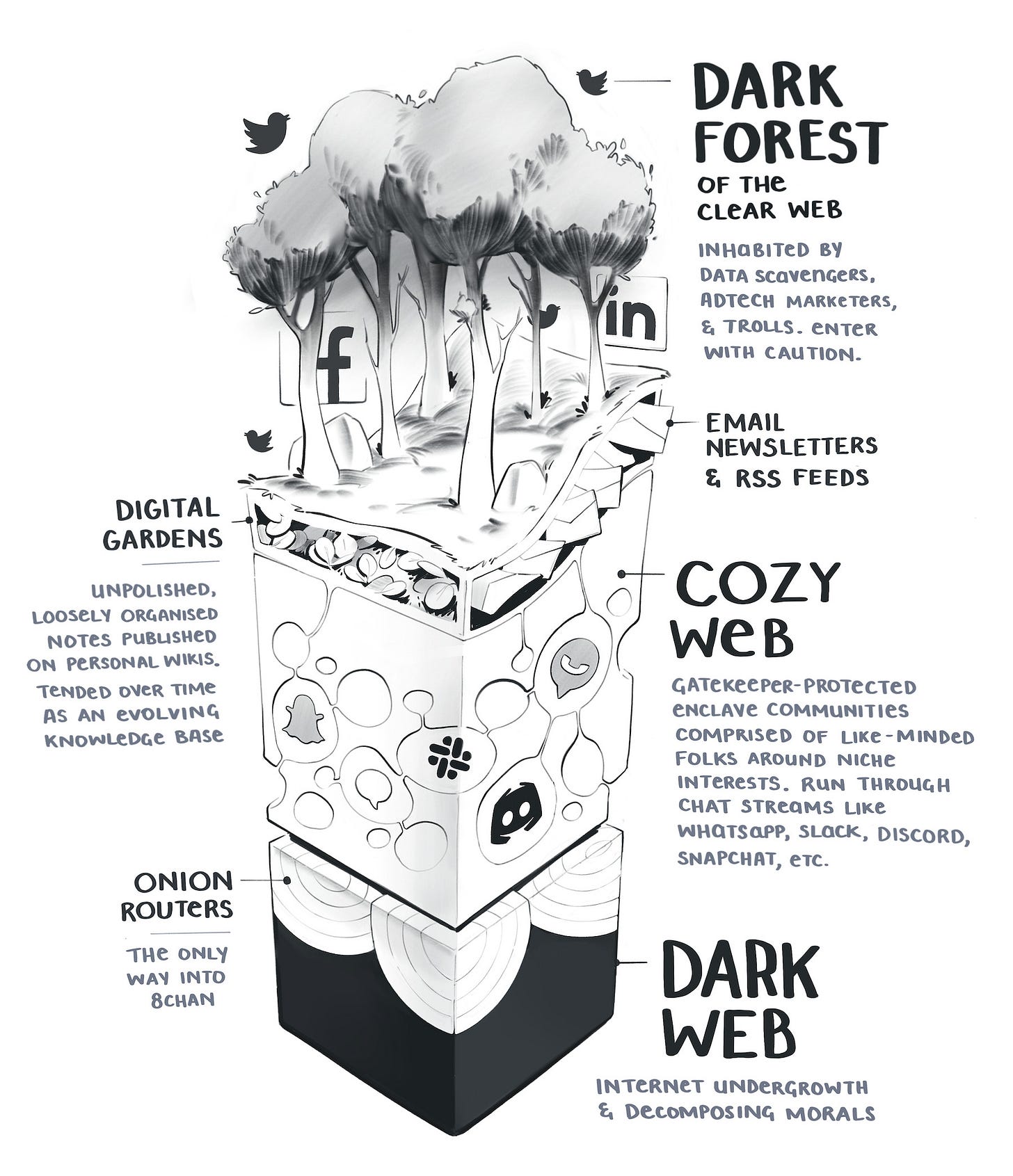

The Internet as a Dark Forest.

To paraphrase Lars, what happens when fake content becomes 100x easier to create?

What happens when every social network is chock-full of bots, drowning your feed in utter gibberish?

What happens when 99% of the people you interact with on Instagram are fake?

What happens when 99% of the people you play chess against online are "fake" humans? What happens when they defeat you within 20 moves every single time?

What happens when every profile you right-swipe on Tinder is a bot that's about to scam you?

What happens when the Internet becomes a never-ending firehose of malicious garbage?

This is what happens: You start logging off the Internet...

... and logging in to more curated, closed communities.

No more talking to fake people on Twitter or Facebook. No more using Google for search.

Instead, everything happens in closed Slack or Discord communities. Invite-only social networks where a curated set of people talk to each other.

Maggie Appleton talks about this scenario in The Expanding Dark Forest.

The “Dark Forest” is originally a term from astronomy. It’s a hypothesis for why we haven't found any aliens yet, despite searching for decades. First proposed in 1983, it was popularized by Liu Cixin's Three-Body Problem trilogy.

Summarizing from Wikipedia:

The dark forest hypothesis is the idea that many alien civilizations exist throughout the universe, but are both silent and paranoid.

In this framing, it is presumed that any space-faring civilization would view any other intelligent life as an inevitable threat, and thus destroy any nascent life that makes its presence known. As a result, the electromagnetic spectrum would be relatively silent, without evidence of any intelligent alien life, as in a "dark forest"...filled with "armed hunter(s) stalking through the trees like a ghost".

In Maggie's view, this is what the internet will look like:

The world has become gloriously open and free over the last few decades. Prepare for it to close down again into a new Dark Age.

But wait, how can we be certain that even in these closed communities, we're talking to humans?

Proof of Human.

I said this on Twitter last week, and I was only half-joking:

But soon, captchas will also become so hard that the average human can't beat an AI at them. (I myself am already struggling. I just can't identify tricycles in pixelated thumbnail images of crowded streets.)

So what would be easy for humans to demonstrate but hard for AI to fake?

Tyler Cowen thinks biometrics are the answer:

One side effect of all the new AI developments is that biometrics will increase greatly in importance.

Captcha tests, for one thing, will become obsolete. To prevent bots from flooding a web site, biometric tests will be a ready option.

Wait, this reminds me of something... no, it can't be... is it even possible... let me just say it:

Do you think Sam Altman created OpenAI and ChatGPT to generate demand for his "Orb" biometrics blockchain?

(Please don't start a conspiracy theory, I'm joking).

In any case, if the above speculations come true, the world will be a dark dystopia.

Unfortunately, though, there's another scenario that might be more likely...

... We get sucked into the morass.

So far, we've been looking at all this generated content along only one dimension: its authenticity. But there's another dimension: its allure. Its addictiveness.

And once you think of that, the implication is obvious:

Any content that's engineered to look real will also be engineered to be addictive!

So maybe we'll get sucked into the online world even harder! We won't even have a chance.

So this is what our dystopian robot-ruled future looks like:

Less like the Matrix's unending vaults of human bodies in vats full of viscous fluid.

More like human lumps sitting in front of screens and eating popcorn.

While our robot overlords suck all our energy to make paperclips.

[PS. The above is of course speculative, and more pessimistic than I actually feel. I do think there’s going to be 100x more garbage on the Internet. It’ll be interesting to see how we adapt.]

Before we continue, a quick note:

Did a friend forward you this email?

Hi, I’m Jitha. Every Sunday I share ONE key learning from my work in business development and with startups, and ONE (or more) golden nuggets. Subscribe (if you haven’t) and join nearly 1,400 others who read my newsletter every week (it's free!) 👇

2. Speaking of predictions:

This is a great list of predictions made in 1923 about 2023.

My favorite predictions:

The Good:

"Life expectancy will be 100 years."

We aren't at 100 years yet, but we're getting close.

"The US will have a population of 300 million."

Not a bad guess! US population is 331 million, up from 110 million in 1923.

The Bad:

"The work day will be four hours long."

Only for Tim Ferriss. The rest of us are slogging away, albeit less than previous generations.

"All people will be beautiful. Beauty contests will be unnecessary as there will be so many beautiful people".

I admire the sentiment, but nope. We still have beauty contests.

The Ugly:

"Newspapers will have been out of business for 50 years."

I wish!

"Women will blacken their teeth and shave their heads."

What even!

The absolutely amazing:

"People will communicate using watch-size radio telephones."

This is so spot-on, it might just be evidence of a time-traveler to 1923, collared by a surveyor as he was trying to blend in!

3. Golden Nugget of the week.

I read a scary article last week, from Modest Coffee:

How we got grifted by a multi-billion dollar distributor and need to move 30,000 bags of coffee.

It underlines a timeless lesson: A successful company can meet sudden death if it scales too fast.

I’ve seen this happen SO many times in retail, it's honestly so sad.

If I recall correctly, Daymond John of FUBU said that they were always 2 weeks from bankruptcy during their fastest growth years!

And so many stories in Shoe Dog (the story of Nike) are about receiving a massive order from retailers (yay!). And then going hat in hand to their bankers to plead for cash (not so yay).

It's a fine line to standout success, running between sudden death from too much growth (like a foie gras duck) and the ignominy of saying no to growth.

So de-risk at every step of scaling.

As Shalabh said on Twitter: "If you bet the house every time, you will eventually lose."

4. We need to talk to LeBron 🤣.

Guess someone needs to tell LeBron what the word "humbling" means.

That’s it for this week. Hope it gave you some food for thought.

While we move slowly and inevitably towards this reckoning, stay safe, healthy, and sane as always.

I’ll see you next week.

Jitha